It’s colo time baby! #

For a while now I have been toying with the idea of putting my own hardware up in ‘the cloud’, but the prices are enormous for a homelabber and I decided against it many times. Now, however, I have found a deal that was too good to pass up (considering UK/EUR pricing) and pulled the trigger.

Why on earth do you need to colo? #

As happy as I am running all my services at home in my lab, I have had difficulty at times with uptime for the things I actually care about. Take this blog, for instance: at the time of writing, it lives on a borrowed server because moving house meant I had to take everything offline, move, and make sure everything worked as it did before. Having services like this blog in a colo avoids a lot of that. (By the time you guys are reading this, all those 1s and 0s should be coming from my colo!)

Another reason I have wanted to do this is to finally stop using my home connection for streaming. I love Subsonic and use it to stream my music to all of my devices, and although my home connection has been fine for this, having it in a datacenter with a 1Gb pipe is a serious improvement and should make a noticeable difference when streaming over LTE or when I’m out of the country. Is it worth paying all this money for ever so slightly quicker music streaming? No, but I can do so much more than just this.

One final reason I will give you guys to justify this to you (well, me) is that I am planning some projects for the good folks over at /r/homelab and need somewhere solid to host them. This is the perfect opportunity for that.

The offer and the plan #

After emailing what seems like almost 100 different datacenters, getting quotes for literally 1000s of pounds (GBP), or receiving very reasonable quotes only to find they wanted to charge me for bandwidth by the motherfucking megabyte, I finally found something that looked like it could be a done deal.

For a very reasonable pre-negotiated price I get:

- 3A of power (at 230V, obviously)

- 5x IPv4 addresses (expandable)

- 10x /64 IPv6 blocks (Awesome!)

- 2x server slots (1U or 2U)

- 1Gb uplink

- 20TB of bandwidth

- 10x sessions per month of remote hands support

- 24/7 access

To me, that’s a pretty good deal. I managed to split the costs with a friend, so each of us gets 10TB of bandwidth and our own server. Sweet!
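To put those numbers in perspective, here is a rough budget sketch. It assumes decimal units (1TB = 10^12 bytes), a 30-day month and an even split of the 3A feed between the two servers. None of those assumptions come from the provider’s terms, and the power figure treats the power factor as 1, so it is really volt-amps.

```python
# Rough budget for the shared colo plan. Assumptions (not from the provider):
# decimal units (1 TB = 10**12 bytes), a 30-day month, power factor of 1,
# and an even split of the power feed between the two servers.

SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000 s in a 30-day month


def sustained_mbps(terabytes_per_month: float) -> float:
    """Average rate (Mbit/s) that would use the whole allowance in a month."""
    bits = terabytes_per_month * 10**12 * 8
    return bits / SECONDS_PER_MONTH / 10**6


def power_watts(amps: float, volts: float = 230.0) -> float:
    """Power available on the feed, P = V * I (power factor taken as 1)."""
    return amps * volts


if __name__ == "__main__":
    total_w = power_watts(3)
    print(f"Power on the feed: {total_w:.0f} W, {total_w / 2:.0f} W per server")
    print(f"20 TB/month = {sustained_mbps(20):.1f} Mbit/s sustained")
    print(f"10 TB/month = {sustained_mbps(10):.1f} Mbit/s sustained")
```

Under these assumptions, 10TB each works out to roughly 31Mb/s of traffic around the clock, far below the 1Gb uplink but plenty for a blog and music streaming.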

So, as soon as this was arranged, I got an account opened and started planning.

Thankfully, due to my tech hoarding, I have loads of servers ready to be configured for this project. After taking inventory of all of my servers it came down to the following hosts:

- Dell R710

- DL380 G6

- DL380 G7

- DL360 G6

- DL360 G7

- Some SUN Shit

After some deliberation I decided to go with the DL360 G7. I like the form factor, and although I have less storage available for it than for the R710, I generally prefer HP gear; plus, my other homelab servers are all HP, so this just seemed fitting.

The plan was to run this guy as an ESXi host with VMs for everything I want to host, one of them being pfSense. Virtualised pfSense is great if done correctly, and it lets me keep everything on the one host. Adding an IPsec link back to my main lab (now known as DC1) lets me route everything normally and keeps this setup as seamless as possible.

The hardware #

After taking a dip into my various boxes of goodies, I decided to deck this thing out with 2x Xeon X5650s and 3x 16GB RDIMMs per CPU. That gives me 12 cores and 24 threads in total, alongside 96GB of DDR3 RDIMM memory. Pretty sweet. I could have gone all out with X5690s and 288GB like my main host, but there really is no need: this should be more than enough, and if I ever need more I can either replace the host with something more powerful or just do some upgrades.

For storage, I was a bit strapped for disks. At first, I thought I didn’t even have enough to fill the damn thing, but after looking in more boxes I found more than enough. I decided to fill 6 of the 8 slots with 300GB SAS disks in RAID10 for VM storage. The remaining two slots have 900GB SAS drives in RAID1 for general storage of other stuff, like my music. Eventually, I want to fill it with 1TB SAS disks, but for now this does the job.
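The usable space from those two arrays is easy to work out: RAID10 stripes across mirrored pairs, so half the raw capacity is usable, and a two-disk RAID1 gives one disk’s worth. A small helper, working in raw decimal GB and ignoring controller and filesystem overhead:

```python
def usable_gb(level: str, disks: int, disk_gb: int) -> int:
    """Usable capacity of a mirrored array, before filesystem overhead."""
    if level == "raid1":
        # Two-disk mirror: every block is stored twice.
        if disks != 2:
            raise ValueError("this sketch only models two-disk RAID1")
        return disk_gb
    if level == "raid10":
        # Striped mirrored pairs: half the raw capacity is usable.
        if disks < 4 or disks % 2:
            raise ValueError("RAID10 needs an even number of disks, at least 4")
        return disks // 2 * disk_gb
    raise ValueError(f"unsupported level: {level}")


print(usable_gb("raid10", 6, 300))  # VM storage: 900
print(usable_gb("raid1", 2, 900))   # general storage: 900
```

Both arrays come to about 900GB usable; swapping in 1TB disks later only changes the `disk_gb` argument.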

The PSUs are the standard 2x 480W units, both connected in the DC to different phases for redundancy. Here are some pictures of it all ready to go:

Networking #

Ah, networking, the best bit of this all!

After installing ESXi, I installed the pfSense VM that handles routing on this machine, both internally and to/from the WAN. I was given my public IPs when I signed up; I set the first as the WAN IP and added the rest of my IPv4s as virtual IPs on the interface, which lets me use whichever IP I want for whatever I want.
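Five usable IPv4 addresses is exactly what a /29 leaves once the network, broadcast and provider gateway addresses are taken out, so a /29 is a plausible shape for this kind of allocation. That is an assumption, not something stated by the provider. The sketch below uses Python’s `ipaddress` module with the documentation range 203.0.113.0/29 as a hypothetical stand-in, and assumes the gateway sits on the first host address.

```python
import ipaddress

# Hypothetical: 203.0.113.0/29 is an RFC 5737 documentation range standing in
# for the real allocation, and the provider's gateway is assumed to sit on the
# first host address.
block = ipaddress.ip_network("203.0.113.0/29")
hosts = list(block.hosts())            # 6 hosts: network/broadcast excluded
gateway, usable = hosts[0], hosts[1:]  # 1 provider gateway + 5 for the customer

wan_ip = usable[0]        # interface address on the pfSense WAN
virtual_ips = usable[1:]  # remaining addresses added as virtual IPs

print(f"gateway {gateway}, WAN {wan_ip}")
for vip in virtual_ips:
    print(f"virtual IP {vip}")
```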

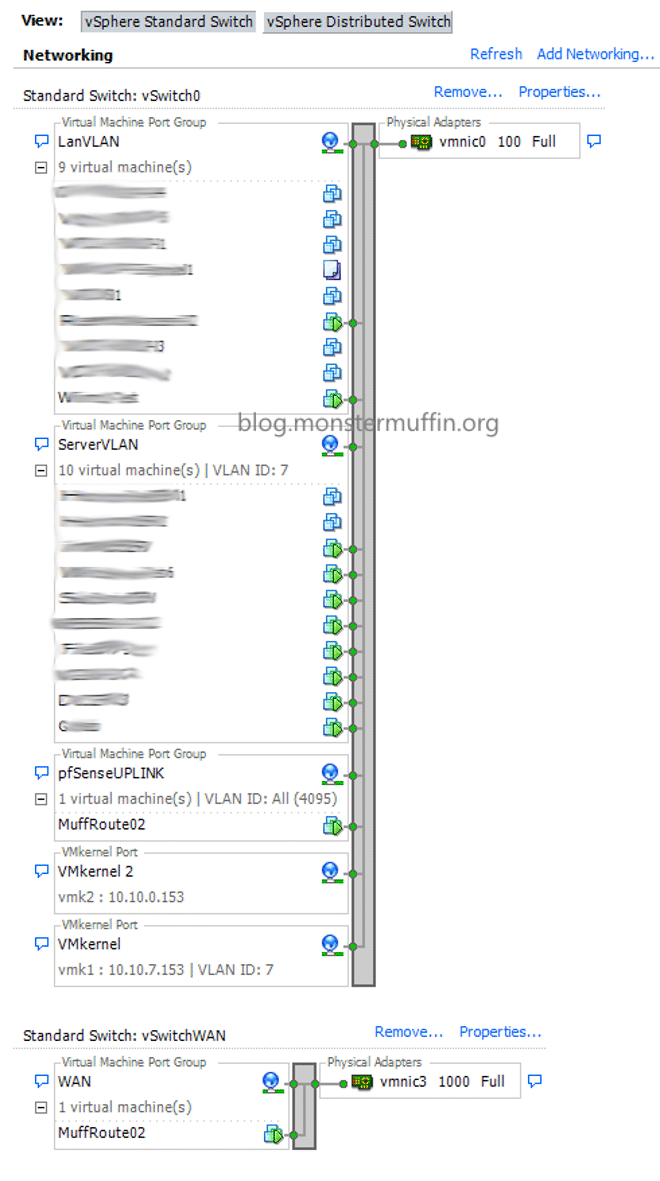

Switching within VLANs is handled by the vSwitches inside ESXi, and routing is handled by the pfSense VM, which has all VLANs tagged on its virtual interface so it can talk to all current and future networks. In terms of pfSense, ESXi networking on this host looks like this:

You can see here that pfSense has 2x interfaces. One is on vSwitchWAN, which has NIC3 connected to it; this is my WAN connection from the colo provider. The second NIC, connected to vSwitch0, connects pfSense to the virtual machines. I have that port group set to all VLANs, which lets me add VLANs as I please and then assign machines to a VLAN by creating a port group for it. You can see 2x VLANs already in the above image.
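With the port group set to pass all VLANs (on a standard ESXi vSwitch this is VLAN ID 4095), frames reach pfSense with their 802.1Q tags intact, and pfSense’s VLAN interfaces sort them by VLAN ID. As a rough illustration of what that tag carries, here is a sketch that packs and unpacks the 4-byte 802.1Q tag with Python’s `struct` module; the VLAN IDs 10 and 20 are hypothetical.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def build_tag(vlan_id: int, priority: int = 0, dei: int = 0) -> bytes:
    """Return the 4-byte 802.1Q tag (TPID + TCI) for a VLAN ID."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094")
    # TCI layout: 3-bit priority, 1-bit drop-eligible flag, 12-bit VLAN ID.
    tci = (priority & 0x7) << 13 | (dei & 0x1) << 12 | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_tag(tag: bytes) -> dict:
    """Split a 4-byte 802.1Q tag back into its fields."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return {"priority": tci >> 13, "dei": (tci >> 12) & 0x1, "vlan_id": tci & 0x0FFF}


for vid in (10, 20):  # hypothetical VLANs behind pfSense
    tag = build_tag(vid)
    print(vid, tag.hex(), parse_tag(tag))
```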

vmnic0 is connected to my LAN VLAN and is cabled externally to the iLO port of my server, which lets me reach the G7’s iLO, assuming everything is working as it should. If I can’t get to the machine, I have KVMoIP provided for me, so I don’t need the iLO to be publicly accessible.

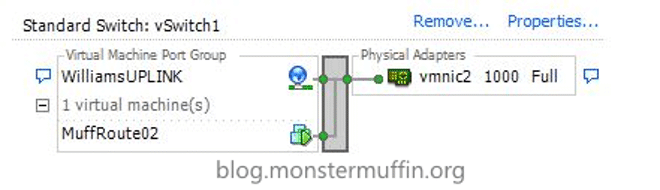

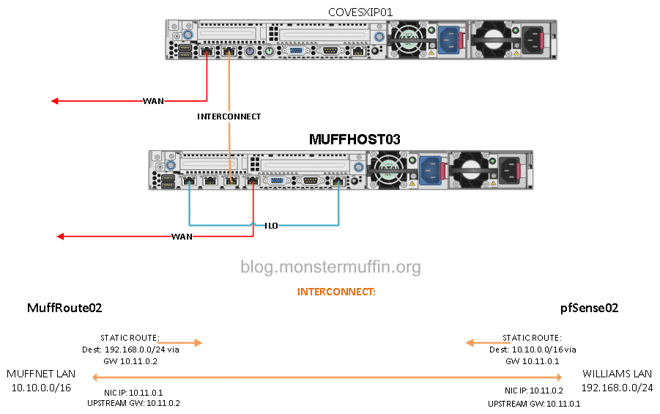

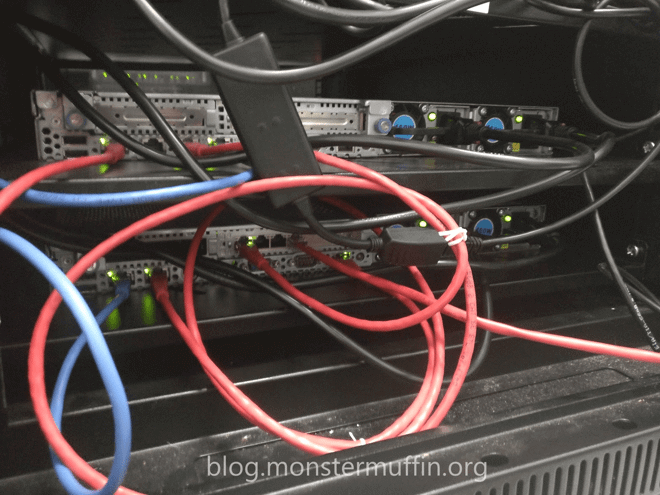

There is another virtual NIC connected to pfSense: an uplink to the other server sharing the colo plan with me. This link is primarily for a domain trust and for some failover traffic between the hosts. Below you can see how I achieved this, as well as a diagram of the connections. The other server also runs pfSense, so the interlink was pretty straightforward to set up. Here is the vSwitch+vmnic setup of the interlink:

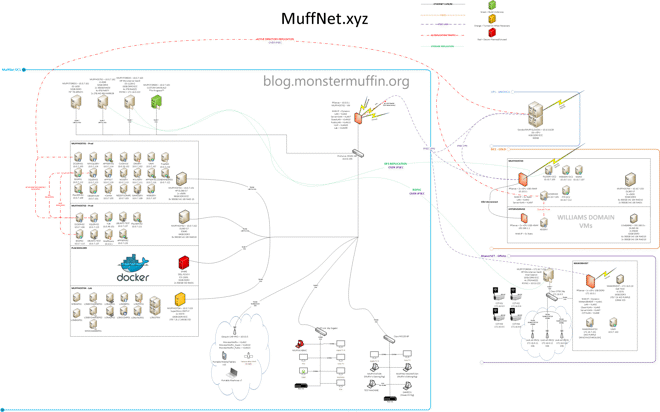

Here is a rough diagram of how the servers are connected in the racks, with more detail put into mine (this is Muffin’s lab, after all).

You can see here that the two pfSense instances have interface IPs completely outside our ranges, as these are only used for the link between them. Each interface has an upstream gateway set to the other machine’s interface, and a static route is configured to pass traffic to the remote subnets via this gateway. This was the most straightforward and logical way I could see of interconnecting the two machines, and it works extremely well.
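The interlink addressing can be sketched with Python’s `ipaddress` module. The 172.31.255.0/30 transit network and the two LAN ranges below are hypothetical stand-ins; the point is that a /30 gives exactly two addresses, each side uses the far end as its gateway, and the transit range must not overlap either site’s subnets.

```python
import ipaddress

# Hypothetical transit network, deliberately outside both sites' LAN ranges.
transit = ipaddress.ip_network("172.31.255.0/30")
mine, theirs = list(transit.hosts())  # a /30 has exactly two usable addresses

# Hypothetical LAN ranges behind each pfSense instance.
my_lans = [ipaddress.ip_network("10.20.0.0/16")]
their_lans = [ipaddress.ip_network("10.30.0.0/16")]

# Sanity check: the link addresses must not collide with either side's LANs.
assert not any(transit.overlaps(lan) for lan in my_lans + their_lans)

# Each side's gateway is the far end of the link; one static route per
# remote subnet points at that gateway.
my_routes = [(lan, theirs) for lan in their_lans]
their_routes = [(lan, mine) for lan in my_lans]

for lan, gw in my_routes:
    print(f"pfSense02: route {lan} via {gw}")
for lan, gw in their_routes:
    print(f"other pfSense: route {lan} via {gw}")
```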

The latency is extremely high for a directly connected interface, but this is something I am looking into; speeds over the link itself are gigabit in transfer tests, so it’s not a huge issue right now. I suspect Hyper-V has something to do with this, but the other host may be changing hypervisor soon, so the issue may get fixed without my intervention (hopefully).

As shown in the diagram above, this host is no longer running Hyper-V; it is now an ESXi machine, and the latency issues have been resolved.

With the network set up like this, all switching is done internally via vSwitches and all routing is done on pfSense02, with static routes to Williams going over the directly connected interface and to DC1.MuffNet.xyz going over IPsec. Awesome.

I also have another IPsec p2p tunnel on this pfSense VM connected to my VPS in America, documented in the diagram below. This lets DC2 talk to that VPS without going through DC1, as I send some traffic to and from that VPS frequently.
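Put together, pfSense02 ends up choosing between the local VLANs, the interlink, two IPsec tunnels and the WAN. The sketch below models that choice as a longest-prefix-match lookup with Python’s `ipaddress` module. All subnets are hypothetical, and it is a simplification: pfSense IPsec tunnels are commonly policy-based rather than entries in the routing table, so read it as a model of where traffic ends up, not of how pfSense implements it.

```python
import ipaddress

# Hypothetical subnets standing in for each site; next-hop labels are
# descriptive only.
ROUTES = {
    "10.20.0.0/16": "local VLANs on vSwitch0",
    "10.10.0.0/16": "IPsec tunnel to DC1",
    "10.30.0.0/16": "interlink to the other colo server",
    "10.40.0.0/24": "IPsec tunnel to the VPS",
    "0.0.0.0/0": "WAN (colo provider gateway)",
}
TABLE = [(ipaddress.ip_network(prefix), hop) for prefix, hop in ROUTES.items()]


def next_hop(destination: str) -> str:
    """Pick the most specific matching route (longest-prefix match)."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in TABLE if addr in net]
    # The default route always matches, so matches is never empty.
    _, hop = max(matches, key=lambda item: item[0].prefixlen)
    return hop


for dst in ("10.40.0.5", "10.10.1.1", "10.30.2.2", "8.8.8.8"):
    print(f"{dst:>10} -> {next_hop(dst)}")
```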

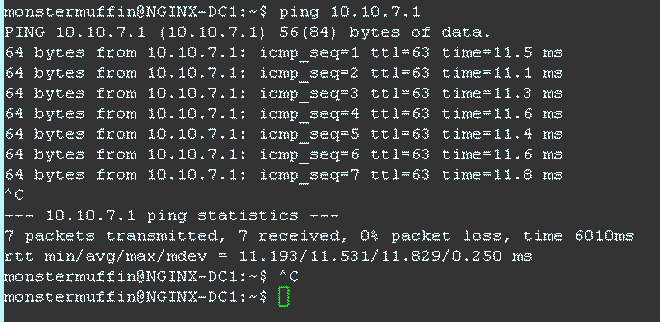

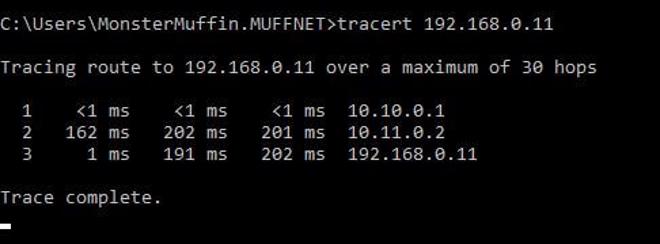

The IPsec links between DC2, DC1 and my VPS were created as per my previous guide. As you can see here, the latency over the link isn’t too bad at all, which is nice:

11ms over a VPN across the internet is more than acceptable IMO, especially considering one end of this link is on a residential connection, which, as we all know, tends to have sub-optimal peering. The link also completely maxes out my home upload/download, which is pretty sweet.
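One reason the tunnel can saturate the home line is that, at 11ms, not much data needs to be in flight. The bandwidth-delay product (rate times round-trip time) gives the TCP window a single stream needs to keep the pipe full; this sketch plugs in the 11ms RTT from above and the 150Mb/s home download speed mentioned in the conclusion.

```python
def bdp_bytes(rate_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to keep the link busy."""
    bits_per_second = rate_mbps * 10**6
    return bits_per_second * (rtt_ms / 1000) / 8


# 11ms is the RTT measured above; 150Mb/s is the home download speed.
window = bdp_bytes(150, 11)
print(f"{window:,.0f} bytes (~{window / 1024:.0f} KiB) in flight")
```

Roughly 200KiB is comfortably within what TCP window scaling allows, so at this latency a single stream should not be window-limited.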

VMs/Pre-Requisites #

Once the hypervisor was set up, I created some base VMs, such as DCSERV03, that need to be synchronised with MuffNet. I created these beforehand, with the server running next to my lab for a few days to make sure everything was fully synced and, more importantly, backed up locally over the gigabit network.

If you’re planning on doing something similar, I would highly advise doing the same. I also used this time to copy templates over to the host from my current hosts so that builds don’t have to happen over IPsec.

Execution #

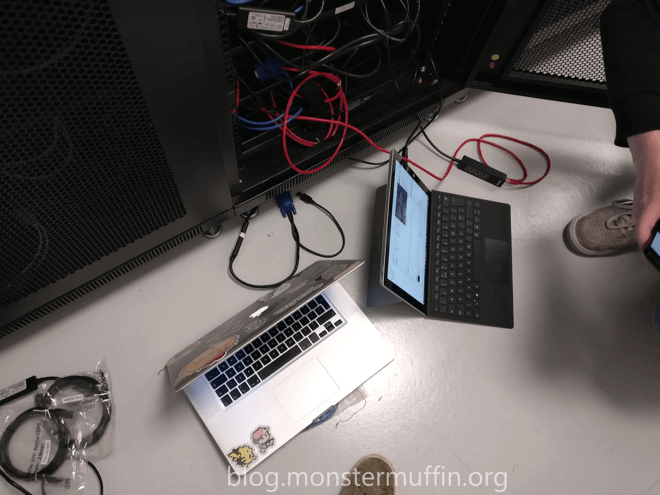

Once everything was set up, we got in the car and drove down. The ‘datacenter’ is a small converted industrial unit, hence the cheap price, but it appeared to be properly kitted out and looked good enough.

I hit a few snags setting everything up, but that was to be expected. It turns out I had set up my IP range incorrectly, which was easily fixed by plugging my laptop into the LAN port connected to my LAN vSwitch (the one used to connect iLO to the LAN). An emergency port like this is highly recommended!

Once I changed the IP settings in pfSense everything came up and I was able to ping DC2 from DC1 on my phone, sweet!

Here are some sneaky photos of it all in the colo:

Conclusion & Future Plans #

I’ve been running with this machine in colo for about 2 months now and I am extremely happy with it. It’s allowed me to move some heavy-lifting/bandwidth-intensive tasks/VMs over to that site, such as VDI machines accessible on the web, and this blog/my music server, with much better results than my home connection.

I’m also using this site as my primary ‘on the go’ VPN from my phone and everything is a lot snappier due to the better peering than my home connection, and I can still access everything in all my sites.

I do backups every other day, which has been working out great. Every month I do a full backup and archive the previous month; this is all done with Veeam. I have a 150Mb/s download at my house, so the backups (scheduled at 2AM) run at full speed, which is around 18MB/s and perfectly fine. I have also been playing with vMotioning VMs around and, as long as the VM isn’t huge, this works pretty well too, even with my subpar upload speed at home.
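The 18MB/s figure is just the line speed divided by 8 bits per byte (150 / 8 = 18.75MB/s). A quick sketch of what that means for backup windows; the backup sizes are hypothetical, and only the 150Mb/s line speed comes from the post. Protocol and tunnel overhead are ignored, so real runs will be somewhat slower.

```python
def transfer_hours(size_gb: float, rate_mbps: float) -> float:
    """Hours to move size_gb (decimal GB) at a steady rate_mbps (Mbit/s)."""
    megabytes_per_second = rate_mbps / 8  # 8 bits per byte
    seconds = size_gb * 1000 / megabytes_per_second
    return seconds / 3600


# Hypothetical backup sizes; only the 150Mb/s line speed comes from the post.
for size_gb in (50, 200, 500):
    print(f"{size_gb}GB at 150Mb/s: {transfer_hours(size_gb, 150):.1f} h")
```

At that rate, a hypothetical 2AM to 8AM window fits roughly 400GB, which is why scheduling full backups overnight matters more than the raw speed.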

What I’m currently working on is adding a 4U storage box over there to have a true cloud backup/replica of my data. Since it will only need power, I don’t see this being too much extra. This will allow for a proper offsite backup that is protected, secure, and all mine. Ideally, I’d like to host Plex there too, but I currently use around 70TB of bandwidth on my home line, a lot of that from Plex, so due to bandwidth constraints I don’t think it would work out.

And finally, a picture of MuffNet’s new network diagram:

Thank you for reading! ~~MonsterMuffin