🎬 Intro

As I get older, my priorities are changing. Some might dismiss this as paranoia, but it’s undeniable that our privacy is at greater risk than ever before.

Big tech and even governments are using your data for, at best, targeted advertising and, at worst, straight-up spying. This isn’t meant to be a political or philosophical debate, though; this post is simply about my push to ‘de-googleify’ my life.

I have long used Google’s services day-to-day, as millions of others do, split mainly between a ‘free’ account and a G Suite (now Workspace) account. The Workspace account dates from back when a nominal fee got you unlimited Google Drive storage, which is no longer the case.

Regardless, I kept the Workspace account, as I had grown to like the benefits of having it around, including:

- Email using my own domain.

- Aliases - The ability to create separate addresses at will, all linked to my main mailbox, has been a godsend. I much prefer this to plus addressing.

- 5TB of storage - Whilst not a vast amount, it was enough to store a backup of my most important data.

- No advertisements and ‘increased privacy’ - Workspace accounts don’t display ads, and they come with stricter privacy terms for how emails are scanned.

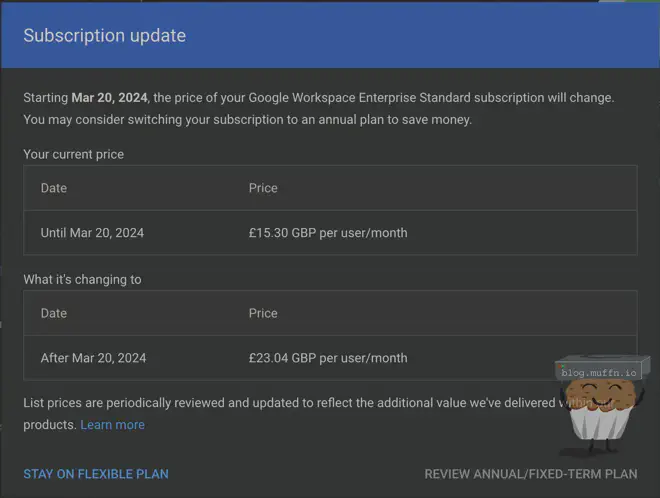

Even without the unlimited storage, I opted to keep the Workspace account for these reasons. It had been working pretty well, until one day I received the following:

On the face of it, this isn’t a big deal, but it did trigger something in my brain that made me think I could probably do this myself. So that’s what I did, or at least tried to do.

⚠️ Being Realistic

I’m not going to say that everyone needs to do this to feel safe, warm and fuzzy. The reality is, Google services are so widely used because they work well, for free. Convenience will almost always win out, and Google services are damn convenient.

There are certainly pains in leaving the status quo, and things simply will not be as streamlined, easy and accessible. This is a tradeoff I have decided I am willing to make, and I’d hazard a guess that if you’re reading this, you fall into the same camp.

The thing with projects like this is, you can always decide to scrap everything and carry on as-is. You’re never locked into one way or another; you can be, but you don’t have to be.

I have chosen to target four main areas of my day-to-day where I use (or used to use) Google:

- Email (Gmail)

- Cloud Storage (GDrive)

- Photos (Google Photos)

- Location Tracking/Archiving

The goal of this post isn’t necessarily to self-host everything, but to be more in control of my own data and not be completely and utterly fucked if Google one day decides to yeet my account.

📭 Email

I won’t lie to you, email was by far the most difficult piece of this puzzle and requires the most tradeoffs.

The main drivers for wanting more control over my email were the following:

- Privacy, as mentioned above.

- The Workspace price hike, for features I don’t want.

- As my free Gmail account had been in use for about 16 years, the sheer amount of shit the mailbox received was immense. Migrating to my Workspace email was supposed to fix this.

- Security for the future, and by that I mean…

🔹 Own Your Address

When you use an email service like Gmail, your email address is tied to that specific provider (e.g., an @gmail.com address). If Google decides to suspend or terminate your account, you could lose access to that email address and, consequently, to any other accounts linked to it for password resets, notifications, etc. This is not only a security risk, but potentially life-altering.

In contrast, when you own your email domain (e.g., an address at a domain you have registered) and use an email hosting provider to manage your email, you retain control over your email address. Your email provider could cancel you all the same, but in this instance you would simply take your business to another provider, point your domain’s MX records at them and recreate the same addresses, and just like that, you have access to those addresses again.

Whilst Google account suspensions may be relatively rare, they can and do happen. Owning your email domain is, in my opinion, an important step to mitigate this risk.

It’s important to note that domain ownership is quite reliable. When you register a domain, you are the legal owner of that domain for the duration of the registration period (typically 1-10 years, renewable indefinitely). As long as you keep your domain registration active and properly configured, it’s unlikely that you would ever lose your domain short of it being seized or something. Just don’t forget to renew it…

🔹 Choosing an Email Provider

Before I get into this, no, I did not even slightly consider self-hosting my email. I don’t want to deal with that and unless you know what you’re doing, I recommend you don’t either.

Now, there are several options for email hosting out there and they all offer different features across different price points. For me, what I was looking for in an email host was the following:

- Ability to host multiple domains/mailboxes without per-user/mailbox/domain pricing. I want to be able to add domains and create users/mailboxes at will. For example, I want my Vaultwarden instance to be able to send from its own address on one of my domains.

- Cheap-ish (yes, yes, I am cheap.)

- Standard protocol support (IMAP/POP3).

- Catchall & Plus addressing support.

- Aliasing support.

- Server-side filtering support.

- GDPR compliance.

There were two options that stood out to me the most, but first an honourable mention to Apple’s iCloud+ which allows you to bring your own domain. It didn’t fit my needs above, and I’m not leaving one tech giant just to join another, but for an easy, solid option for email hosting I think it’s a good way to go.

When looking at offerings, services such as Fastmail cropped up a lot and seem like interesting options, but the per-user/per-month pricing model was a hard pass from me. It is perfect, though, if you know you only want one or two mailboxes/users.

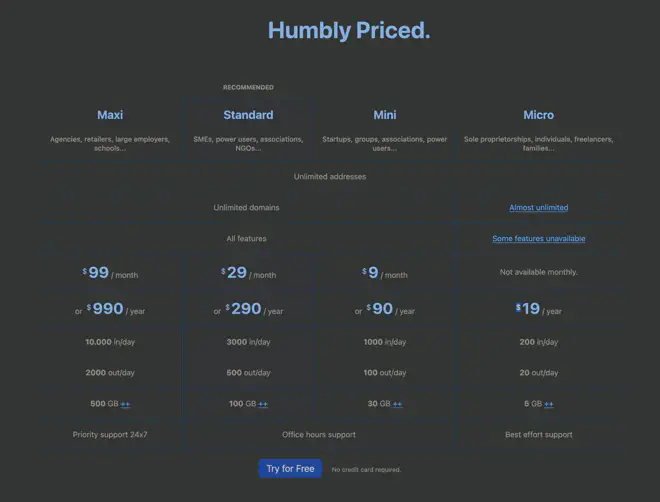

Anyway, the two options I ended up deciding between were MXroute and Migadu. Both are attractive and tick almost all of my requirements.

MXroute has quite an impressive lifetime option. Usually I would steer clear of any service offering a lifetime membership, especially for something like email, but their reasoning for offering it seems acceptable enough.

You will notice that both offerings are pretty low on mailbox storage, but neither provider limits you by users, mailboxes or domains, only by actual usage. I don’t send a lot of email, I have no need to, and I don’t care about keeping decades of emails; if I did, I could do so locally.

Both MXroute and Migadu offer compelling pricing, considering it’s purely usage based, but there were 3 main reasons for choosing Migadu:

- MXroute is not officially GDPR compliant. That’s fine, they are US based and don’t have to be, and to be honest, if that were the only difference it would matter less. Note: this information is true at the time of writing and may have substantially changed.

- Whilst they both have good pricing for their small tiers paid yearly, Migadu’s micro tier is $19 a year. With limitations, of course.

- Migadu have pretty good documentation on their features, their admin panel makes sense and they are honest and upfront about their service, as seen on their pros/cons list.

📥 Email - Take One - Migadu

So, I started a trial with Migadu and around a week later had purchased the micro plan. Let’s go over some limitations:

🔹 Limitations

🔸 5GB of storage across the account

Seems small, sure, but it’s $19 a year. How much email do I really need to store in the cloud? After checking my Workspace email usage, I was using about 700MB which was an accumulation of several years, most of which was almost certainly trash.

🔸 ‘Almost unlimited domains’

An abuse-prevention measure, which is reasonable. I added four or five domains with a few mailboxes in my first month.

🔸 ‘Some features unavailable’

A non-issue for me, as I don’t care about mailbox limits, don’t need more than one admin (myself) and don’t have any users in the traditional sense to send welcome messages to.

🔸 Email limits

Now this is the big one. The micro plan has a limit of 200 inbound and 20 outbound emails per day. Admittedly, this does seem extremely low and I can easily see it being an issue for some individuals, never mind families, but for my girlfriend and me it’s fine.

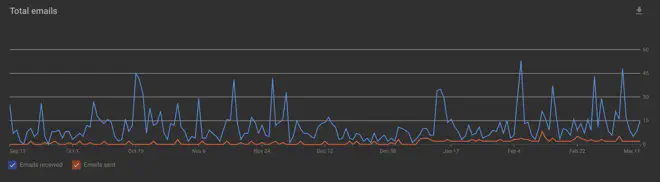

Like I said, I don’t send much email and this was confirmed by checking my Workspace stats:

Granted, this is only one of my email accounts, but since I was well under the micro limit over a good few months, I wasn’t worried.

If I ever run into issues with these limits, $9 per month/$90 per year for the ‘mini’ plan is still an easy buy, but I don’t foresee ever running into issues.

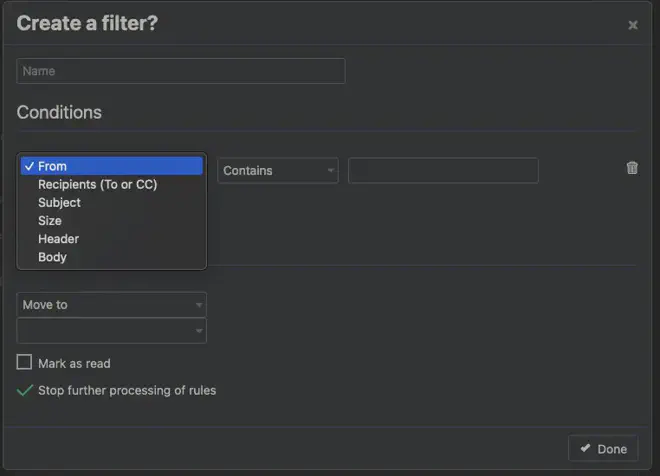

🔹 Filtering

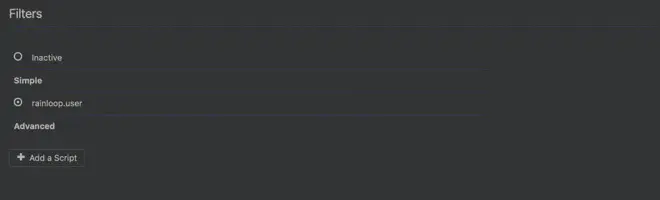

Filtering server side is not something I had considered too much when I started thinking about this and I quickly learned that I was a bit out of my depth when looking at how to do this.

Migadu uses the sieve filtering language and the only place I have found to do this is via the online webmail in the settings.

I did find a Sieve extension for Thunderbird, but its last release was in November 2021 and it does not work with recent versions of Thunderbird, which I need in order to run on ARM64.

When I found the setting in the webmail and clicked the Create Script button I instantly thought, ah fuck.

Yeah, I didn’t know where to start with this and put it off for a few days. I was surprised how little good information there was on the internet about writing scripts.

I was about to send a grovelling support request to Migadu asking whether there were other ways to configure Sieve (at the time, that sounded better than actually reading the RFC) when I noticed a button in the web UI.

Confirmed. I am a simple user 👍.

This radio option, for some reason called rainloop.user, allows simple scripts to be created in the UI and, even better, can show the rules as a script.

A generated filter looks like this:

```sieve
# Sieve requires extensions to be declared once at the top of the script (RFC 5228);
# added here so the snippet stands on its own.
require ["fileinto"];

/*
BEGIN:FILTER:3141592653589793238462
BEGIN:HEADER
eyJJRCI6IjMxNDE1OTI2NTM1ODk3OTMyMzg0NjIiLCJFbmFibGVkIjp0cnVlLCJOYW1lIjoiU3
RlYW0gPiBTdGVhbSBGb2xkZXIiLCJDb25kaXRpb25zIjpbeyJGaWVsZCI6IkZyb20iLCJUeXBl
IjoiQ29udGFpbnMiLCJWYWx1ZSI6Im5vcmVwbHlAc3RlYW1wb3dlcmVkLmNvbSIsIlZhbHVlU2
Vjb25kIjoiIn1dLCJDb25kaXRpb25zVHlwZSI6IkFueSIsIkFjdGlvblR5cGUiOiJNb3ZlVG8i
LCJBY3Rpb25WYWx1ZSI6IklOQk9YL0FjY291bnRzL1N0ZWFtIiwiQWN0aW9uVmFsdWVTZWNvbm
QiOiIiLCJBY3Rpb25WYWx1ZVRoaXJkIjoiIiwiQWN0aW9uVmFsdWVGb3VydGgiOiIiLCJLZWVw
Ijp0cnVlLCJTdG9wIjp0cnVlLCJNYXJrQXNSZWFkIjpmYWxzZX0=
END:HEADER
*/
if header :contains ["From"] "noreply@steampowered.com"
{
    fileinto "Accounts/Steam";
    stop;
}
/* END:FILTER */
```
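The `HEADER` block isn’t part of the Sieve logic. It appears to be base64-encoded JSON that the webmail UI keeps so it can re-open the rule in its editor. I’m inferring that from its contents, not from any RainLoop documentation. Here is a minimal Python sketch that decodes it; the function and variable names are my own:

```python
import base64
import json

# The seven base64 lines between BEGIN:HEADER and END:HEADER, copied verbatim.
HEADER_LINES = [
    "eyJJRCI6IjMxNDE1OTI2NTM1ODk3OTMyMzg0NjIiLCJFbmFibGVkIjp0cnVlLCJOYW1lIjoiU3",
    "RlYW0gPiBTdGVhbSBGb2xkZXIiLCJDb25kaXRpb25zIjpbeyJGaWVsZCI6IkZyb20iLCJUeXBl",
    "IjoiQ29udGFpbnMiLCJWYWx1ZSI6Im5vcmVwbHlAc3RlYW1wb3dlcmVkLmNvbSIsIlZhbHVlU2",
    "Vjb25kIjoiIn1dLCJDb25kaXRpb25zVHlwZSI6IkFueSIsIkFjdGlvblR5cGUiOiJNb3ZlVG8i",
    "LCJBY3Rpb25WYWx1ZSI6IklOQk9YL0FjY291bnRzL1N0ZWFtIiwiQWN0aW9uVmFsdWVTZWNvbm",
    "QiOiIiLCJBY3Rpb25WYWx1ZVRoaXJkIjoiIiwiQWN0aW9uVmFsdWVGb3VydGgiOiIiLCJLZWVw",
    "Ijp0cnVlLCJTdG9wIjp0cnVlLCJNYXJrQXNSZWFkIjpmYWxzZX0=",
]


def decode_filter_header(lines: list[str]) -> dict:
    """Join the wrapped base64 lines and parse the JSON they encode."""
    return json.loads(base64.b64decode("".join(lines)))


if __name__ == "__main__":
    rule = decode_filter_header(HEADER_LINES)
    print(rule["Name"])                    # Steam > Steam Folder
    print(rule["Conditions"][0]["Value"])  # noreply@steampowered.com
    print(rule["ActionValue"])             # INBOX/Accounts/Steam
```

The decoded JSON mirrors the UI fields: the condition, the action, and the Stop and MarkAsRead flags. It stores the target folder as `INBOX/Accounts/Steam`, while the script itself files into `Accounts/Steam`.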

It would be nice to be able to manage the server-side filters from Thunderbird/Betterbird one day, but this works just fine.

I haven’t been able to move emails into folders more than 2 levels deep; I’m not sure whether this is an issue with Migadu or with me.
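If I ever manage filters outside the webmail, rules this simple are easy to generate. The Python sketch below turns (header, substring, folder) rules into Sieve shaped like the generated filter. The `Rule` type and `build_script` helper are hypothetical, and only `header :contains` matches are covered. The sketch declares `fileinto` up front, as RFC 5228 requires. Uploading the result to the server (e.g. over ManageSieve) is left out.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    """Hypothetical rule type: file mail whose `header` contains `value` into `folder`."""
    name: str
    header: str
    value: str
    folder: str


def sieve_quote(text: str) -> str:
    """Quote a Sieve string literal: backslashes and double quotes must be escaped."""
    return '"' + text.replace("\\", "\\\\").replace('"', '\\"') + '"'


def build_script(rules: list[Rule]) -> str:
    """Emit one if/fileinto/stop block per rule, after a single require line."""
    lines = ['require ["fileinto"];', ""]
    for rule in rules:
        lines += [
            f"# {rule.name}",
            f"if header :contains {sieve_quote(rule.header)} {sieve_quote(rule.value)}",
            "{",
            f"    fileinto {sieve_quote(rule.folder)};",
            "    stop;",
            "}",
            "",
        ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_script([Rule("Steam", "From", "noreply@steampowered.com", "Accounts/Steam")]))
```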

🔹 Security Considerations

IMAP and POP3, the standard protocols used by most email providers, lack the extra authentication layers that big providers like Google and Microsoft build into their own apps. Because those apps are proprietary, the providers can add extra checks when you access your email through them. That can’t happen over plain IMAP, so securing an IMAP account takes extra measures on your part.

One significant issue with IMAP and POP3, being the ancient protocols that they are, is that the usual login methods send the password itself to the server. They rely entirely on the TLS connection around them for protection, which leaves the password vulnerable to interception if TLS is missing or misconfigured, amongst other things.
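To make that concrete: with the common AUTH PLAIN mechanism (RFC 4616), what goes over the wire is just the username and password joined by NUL bytes and base64-encoded. Here is a small standard-library Python sketch; the helper names are my own:

```python
import base64


def sasl_plain_response(username: str, password: str, authzid: str = "") -> str:
    """Build the SASL PLAIN initial response: authzid NUL authcid NUL passwd, base64-encoded."""
    raw = f"{authzid}\0{username}\0{password}".encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


def recover_credentials(response: str) -> tuple[str, str]:
    """Anyone who can read the response can reverse it: base64 is an encoding, not encryption."""
    _authzid, username, password = base64.b64decode(response).decode("utf-8").split("\0")
    return username, password


if __name__ == "__main__":
    captured = sasl_plain_response("me@example.com", "correct horse")
    print(captured)
    print(recover_credentials(captured))  # ('me@example.com', 'correct horse')
```

This is why providers insist on TLS, either implicit TLS or STARTTLS. In Python, for example, `imaplib.IMAP4_SSL` establishes TLS before `login()` sends anything. TLS protects the password in transit, but it does nothing once the password itself has leaked or been phished.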

Migadu’s webmail frontend supports 2FA, which adds an extra layer of protection when the account is accessed via the web. The issue, however, is that the same password is used for IMAP/POP3, which, as mentioned, cannot natively use MFA. If someone has your password, they can access the account via these protocols, and there’s not much that can be done about it natively.

🔹 Migadu’s Service

Overall I was happy using Migadu, and things worked as I expected. I used the web frontend for email much more than I initially anticipated, due to how lackluster I found most macOS email clients.

I only ran into the outbound limit once, when I allowed Overseerr to send emails and it went a bit insane. I decided that wasn’t important, so I just removed the config.

Shared mailboxes worked well too, for the price it really was everything it promised to be, but..

🌜 Email - 9 Months Later

I had this email setup running in December, started writing this in January, it’s now September.

It worked well, I cannot deny. I only had one instance of emails failing to send or bouncing back, for which I had to fall back to my Gmail account; otherwise it did what was advertised.

My only gripes, and this will sound a bit stupid considering my spiel so far, were that it was too basic, leaving me not feeling very productive with my workflows, and that having just password authentication standing between anyone and all my emails was also meh.

🔐 Email - Take Two - Proton

When I started looking into the offerings, I had initially completely disregarded Proton services as I would need to use their own proprietary apps for mail on Android/iOS, and now I see that may have been a mistake.

After 7 months of grappling with less-than-ideal email apps, I realized Proton’s limitations were actually quite manageable for my needs. I decided to give their services a try.

After all, it doesn’t really matter if their apps are proprietary, as long as they work well and add more security to standard IMAP, which of course their service does.

🔹 Proton’s Offering

Firstly, if you somehow don’t know about Proton, pause here and go do some quick reading.

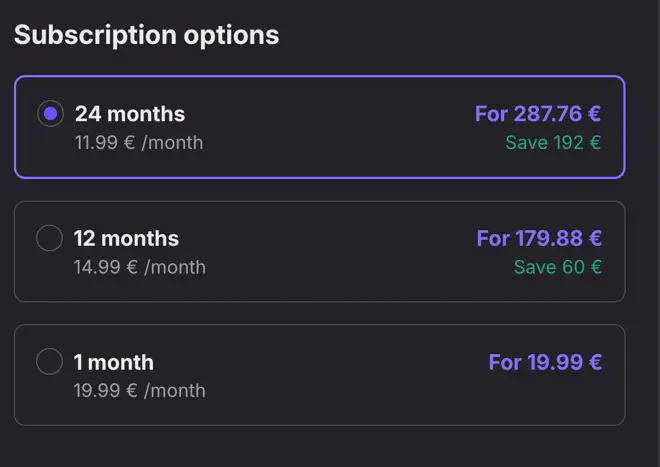

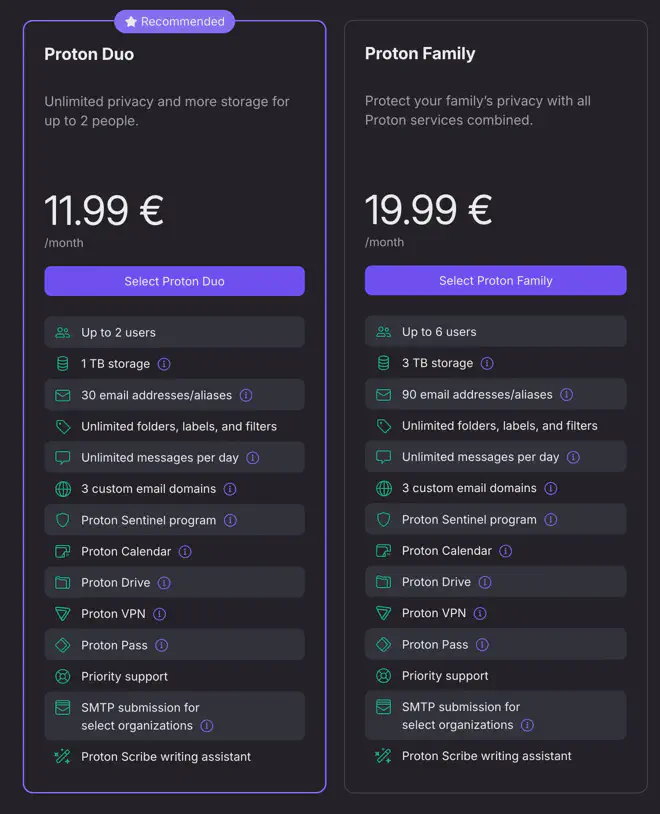

Proton’s offerings are simple: a free plan, ‘Proton Unlimited’ (which I was testing), a family plan, and a newly introduced Duo plan.

After testing the Unlimited plan for a short while, I ended up biting the bullet and going for the Duo plan for myself and my partner. Turns out procrastinating on this worked in my favour: I was planning to buy 12 months of the family plan, but the Duo plan came out of nowhere and, if purchased for 12 months, comes out to $11.99 per month, forever, so I just did that.

Proton is a lot more expensive (1 year of Migadu costs about the same as 2 months of Proton), but their offering includes a lot more.

🔸 500GB/1TB/3TB storage

500GB for the Unlimited plan, 1TB for Proton Duo and 3TB for Proton Family, which is pretty reasonable and a good way to get some more ‘easy’ storage for sharing files with family etc.

🔸 15/30/90 mail addresses

I initially had grandiose plans for all my self-hosted services to send from their own email addresses, but in reality I only have around five, of which only about two are absolutely necessary. Outside these services, there are a handful of named accounts and a few others, totaling 13 mailboxes/addresses. So, the ‘limitation’ of 30 addresses is a non-issue for me. SimpleLogin allows me to work around this anyway if required (for receiving, not sending).

🔸 3 custom email domains

Whilst being by far the biggest limitation compared to Migadu, I only use 3 domains. If I ever need more domains, I can always use the micro Migadu plan for that.

🔸 SimpleLogin

Simplelogin is included with paid plans, with unlimited aliases. This is a direct integration and addresses the requirement to have aliased emails for services.

🔹 Proton’s Apps

So, this is the biggest reason for going the Proton route. With plain IMAP/SMTP and no custom implementation, the only thing that stands between someone and your email is a password, and that really sucks. There isn’t much that can be done about this without redesigning the entire protocol; these protocols are very old.

That being said, the Proton apps are a bit of a mixed bag, though most of my usage is in their email app, which in my opinion is pretty good. I’m not someone who conducts a lot of business over email, so my use case isn’t the best for a proper overview, but for my use it’s been good. The web and mobile apps follow a similar layout, and it’s easy to use both.

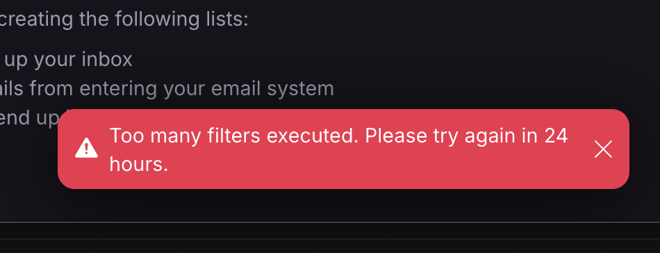

Filters work just as expected; however, I have run into the following issue several times, which has sucked:

Now, their other apps are questionable. Their Drive app, for example, is not as feature-rich as Seafile, so I have no real use for it despite having 1TB of shared storage. I guess I will end up using it as a cold backup for some encryption keys and the like, but otherwise this will just end up being email storage, which should last several lifetimes.

Proton calendar on the other hand is just ..bad. I want it to be good so I can fully switch but at the time of writing, it needs more time in the oven. For example, on Android there is no integration into the system, so clicking on anything calendar related will not bring up Proton calendar as an option, which is basic functionality.

I can’t speak to Proton Wallet/Pass, I have absolutely 0 interest in storing BTC with Proton or replacing my Vaultwarden instance.

Proton VPN works okay, though it certainly seems to be quantity over quality. I notice a lot more CAPTCHAs, and it generally seems slower than a certain Swedish company, but it’s included in the price so it would be silly not to use it. Proton’s VPN is also generally not as fast when tunneling P2P.

🔹 Proton Migration

Proton Mail has an import tool, Easy Switch, that I found worked well across various accounts. I just slapped in Migadu’s IMAP details and it pulled in everything; my Workspace account was even easier, as there are built-in integrations for larger providers.

One thing that isn’t possible with Proton that I had been using with Migadu was shared mailboxes. This has been requested but doesn’t seem to have much movement. I don’t really understand how a provider can have a family plan and not support shared mailboxes. Is this the case with enterprise users too? Surely not. Anyway, for now I am using SimpleLogin to forward a specific alias to separate mailboxes, which is an imperfect workaround.

🔹 SMTP Access

One slight caveat I learned whilst setting the account up is that SMTP access for sending outbound via your account is not enabled by default. The docs say you must contact support with a use case and how many outbound mails will be sent, which I did and was granted access the next day.

This does make sense from an abuse point of view, but it’s something to note.
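Once SMTP access is granted, a self-hosted service can send through Proton like through any other SMTP submission server. Below is a minimal Python sketch using the standard library’s smtplib. The host, port and token details reflect my understanding of Proton’s SMTP submission docs: `smtp.protonmail.ch`, port 587 with STARTTLS, and an SMTP token generated in Proton’s settings rather than your account password. Check them against the current documentation. The addresses are placeholders.

```python
import smtplib
import ssl
from email.message import EmailMessage


def build_notification(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Assemble a plain-text message; sender must be an address on the account."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_submission(msg: EmailMessage, username: str, smtp_token: str,
                        host: str = "smtp.protonmail.ch", port: int = 587) -> None:
    """Connect, upgrade to TLS with STARTTLS, authenticate with the SMTP token, then send."""
    with smtplib.SMTP(host, port, timeout=30) as smtp:
        smtp.starttls(context=ssl.create_default_context())
        smtp.login(username, smtp_token)
        smtp.send_message(msg)


if __name__ == "__main__":
    msg = build_notification("alerts@example.com", "me@example.com", "Test", "Hello from my homelab")
    print(msg)  # inspect it; call send_via_submission(...) with real credentials to send
```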

🔹 Proton’s Service

I’ve only been using Proton services properly for about a month at this point, but I’ve followed the company and offering from the start. It’s a confusing company to me, to be honest. I support their mission but from my perspective they seem to be spreading too wide and losing focus; incomplete apps and services whilst working on a bitcoin wallet seems, to me, an odd choice but I will see how it plays out.

While only time will tell if Proton remains a good fit, my first month using their services has been largely positive. I have already paid for 2 years so will be using it for at least that long.

But for now, I am happy enough. My emails are now being sent to/from my new addresses, and my domains from Workspace have been moved to my Proton account.

💾 Storage

I have two requirements for file storage:

- Backing up my backups to the cloud.

- ‘Cloud drive’ instant access to files and easy sharing from the web or using native apps, from any device. These files should not live on the device.

🔹 Cloud Storage Providers

One thing I read a lot on the internet is people, rightfully so, discussing the implications of putting your files on random cloud providers’ servers and simply trusting that they are not doing anything naughty and that their security is not an afterthought. The data you put in is at the mercy of the provider.

The thing is, it’s easy enough to negate most, if not all, of these worries. As long as the files are put onto these services encrypted, and the encryption isn’t weak/broken, it probably doesn’t matter that much how the data is handled - availability becomes the main issue. This is why all my cloud data is pushed using rclone crypt.
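For illustration, an rclone crypt remote is just a second remote that wraps the first. The Python sketch below writes such an rclone.conf with configparser. The remote names, the SFTP backend type, the storage box host/user and the `seafile` path are placeholders of mine, not my actual config. The password fields must hold the output of `rclone obscure`, not the plain passphrase.

```python
import configparser
import io


def crypt_config(host: str, user: str, path: str, obscured_pw: str, obscured_salt: str) -> str:
    """Render an rclone.conf with a transport remote plus a crypt remote layered on top."""
    config = configparser.ConfigParser()
    # Transport remote (assumption: SFTP to a storage box sub-account; auth keys omitted).
    config["hetzner"] = {"type": "sftp", "host": host, "user": user}
    # Crypt remote wrapping a path on it: contents and names are encrypted client-side.
    config["hetzner-crypt"] = {
        "type": "crypt",
        "remote": f"hetzner:{path}",
        "filename_encryption": "standard",
        "directory_name_encryption": "true",
        "password": obscured_pw,      # output of `rclone obscure <passphrase>`
        "password2": obscured_salt,   # output of `rclone obscure <salt>`
    }
    out = io.StringIO()
    config.write(out)
    return out.getvalue()


if __name__ == "__main__":
    print(crypt_config("uXXXXXX.your-storagebox.de", "uXXXXXX-sub1", "seafile",
                       "<rclone obscure output>", "<rclone obscure output>"))
```

With that in place, anything copied to `hetzner-crypt:` lands on the storage box encrypted, names included. Listing through the same remote shows it decrypted. SFTP authentication (a `key_file` or `pass` entry) is left out here.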

There are too many cloud providers out there and they all offer varying levels of security, features and ‘trustworthiness’. At the end of the day, it probably doesn’t matter what you go for. For me, it’s a Hetzner storage plan.

I like Hetzner. I use some of their ARM64 VPSes for some apps as they are cheap and reliable. When I saw they did storage plans for reasonable prices I was interested.

Their plans are cheaper than anything I had seen so far, and they natively support features that make them an instant win for me:

- rsync support

- Restic support

- Snapshots

- Sub-Accounts

- No minimum contract

- No network limits

- Cheap af

- Scalable

I purchased the 10TB plan which is €25ish per month.

When Google killed unlimited storage, I had to stop storing backups in the cloud and selectively backup what fit in the 5TB limit. 10TB comfortably fits my requirements with space to spare.

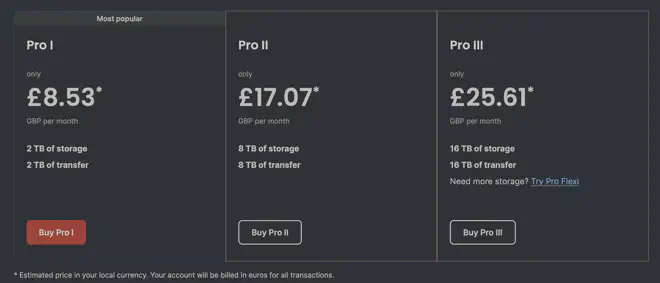

One other option was MEGA’s Pro plans, which are suspiciously cheap. They do have a transfer cap, but it seems to be equal to the storage capacity, so it seems fair enough for backup purposes.

I may decide to switch to MEGA for the savings at some point, but for now I am happy with Hetzner.

🔹 ‘Live’ File Storage

Most providers in the cloud storage game prioritize convenience over security meaning most providers encrypt your data at rest on their servers. This means that the files are encrypted when stored on the provider’s servers, using keys managed by the provider. While this can protect data from being accessed if the physical devices are compromised or from a data breach, the provider still has the ability to decrypt your files for various purposes, such as indexing for search, generating previews, or complying with data requests.

To ensure true end-to-end security, the client application must encrypt the data before uploading it to the cloud, using a key that you control. This client-side, or end-to-end, encryption is not widespread. Google does have an option for enabling it on higher-tier Workspace plans, and iCloud does it if you specifically enable it.

This is all fine and dandy, but you still have to trust the provider’s implementation. It isn’t always a perfect solution either as, for example, you can no longer access your files on the web with iCloud’s implementation as they don’t have your keys.

As usual, there are quite a few FOSS solutions for this, the biggest one being, of course, Nextcloud. I am not a huge fan of Nextcloud and all the modules it offers; I just want file storage with simple sharing and the ability to stream files on macOS/Linux.

The main contenders for me were ownCloud Infinite Scale and Seafile. I ended up choosing Seafile, which offers high-performance file syncing and sharing, along with Markdown WYSIWYG editing, wikis, file labels and other knowledge-management features.

🔹 Seafile

Seafile provides access to all my files through its app or web interface on any device.

In my setup, the data for Seafile is volume mounted on an encrypted share, which is backed up via rclone with crypt, giving me full encrypted backups in the cloud.

Seafile is pretty fast, and that’s mostly because instead of storing whole files, Seafile uses a block-based storage system, splitting files into smaller blocks with each block being stored as a separate file in the backend storage. This enables Seafile to efficiently sync changes and handle file versioning, as only the modified blocks need to be transferred and stored.
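Here is a toy Python sketch of why block storage makes syncing cheap. It splits a file into blocks, identifies each block by its hash and uploads only the blocks the server doesn’t already have. It uses tiny fixed-size blocks for readability. As I understand it, Seafile itself uses content-defined chunking with much larger blocks, which copes better with insertions that would shift every later fixed-size block.

```python
import hashlib

BLOCK_SIZE = 4  # tiny for demonstration; real systems use blocks on the order of megabytes


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Return the SHA-1 hex digest of each fixed-size block, in order."""
    return [hashlib.sha1(data[i:i + block_size]).hexdigest()
            for i in range(0, len(data), block_size)]


def blocks_to_upload(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> set[str]:
    """Block IDs present in the new version that the server does not already store."""
    stored = set(split_blocks(old, block_size))
    return {block_id for block_id in split_blocks(new, block_size) if block_id not in stored}


if __name__ == "__main__":
    before = b"aaaabbbbcccc"
    after = b"aaaaXXXXcccc"  # only the middle block changed
    print(len(blocks_to_upload(before, after)))  # 1
```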

What this means, however, is that you cannot browse the backend of Seafile without Seafile. This is understandably a problem for a lot of people, but it’s a trade-off I am fine with. As long as the data is backed up, I can always spin up Seafile again and point it to the data. Seafile has a good page on backup and restore, as well as FSCK tools, which allow for repairing corruption of its block system and exporting (unencrypted) libraries without requiring Seafile itself and its database.

It is worth mentioning that whilst Seafile is open source, there is a paid Pro edition with some features locked behind it, one of the main ones being automatic garbage collection. This is a non-issue for me though, as you will read below. Note, however, that the Pro edition is free for up to 3 users, which is pretty dope.

🔹 Data Flow

Here is a flow of my data backup/access:

It might seem complicated but it is rather simple in practice.

Seafile has become the frontend for data access, replacing the Google Drive app on my devices. It allows me to have the same files, updated, wherever I am on whichever devices with versioning.

On macOS, Seafile uses Apple’s native File Provider API, allowing for ‘virtual drive’ usage, which is exactly how Google Drive and most other such applications work, and it works seamlessly. This is available for Windows too. I haven’t had any firsthand experience with the Linux client, but once my Framework arrives I will be daily driving Fedora, so we shall see.

Seafile runs containerised in a VM, using Docker at one of my sites with a 3Gb/3Gb connection, so link speed isn’t an issue. Seafile’s backend is volume mounted via NFS using Docker’s native NFS driver to an encrypted ZFS datastore.

Seafile is deployed and managed with a third-party Docker deployment, a fully containerized deployment of Seafile for Docker, Docker Swarm and Kubernetes, which is much easier to manage. Its maintainers describe it as a “complete redesign of the official Docker deployment with containerization best-practices in mind.”

I highly recommend using this deployment for Seafile.

The Seafile data is then backed up 3 times.

- The first time, a nightly rclone one-way copy to my Hetzner storage account, using a subaccount to silo this data off. A one-way copy means that files deleted locally, accidentally or otherwise, are not deleted from the backup (a file that changes or becomes corrupted will still overwrite its backed-up copy, though).

- A second time via a nightly two-way sync to an encrypted NAS share at my home via my S2S Wireguard mesh.

- Then finally a third backup, a weekly one-way copy from my home to my NAS at a friend’s home, again part of my Wireguard mesh.
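The difference between a one-way copy and a mirror is easy to get wrong, so here it is as a tiny Python model, with dicts standing in for directories. It follows the documented behaviour of `rclone copy`, which never deletes from the destination, versus `rclone sync`, which makes the destination match the source. A copy still overwrites a file that changed at the source, so a corrupted file would replace its good backup. Point-in-time snapshots are what cover that case.

```python
def one_way_copy(source: dict[str, bytes], dest: dict[str, bytes]) -> dict[str, bytes]:
    """Like `rclone copy`: new and changed files are transferred, nothing at dest is deleted."""
    result = dict(dest)
    result.update(source)  # changed files overwrite their old copies
    return result


def mirror_sync(source: dict[str, bytes], dest: dict[str, bytes]) -> dict[str, bytes]:
    """Like `rclone sync`: dest ends up identical to source, deletions included.

    `dest` is only a parameter for symmetry; whatever it held is replaced.
    """
    return dict(source)


if __name__ == "__main__":
    backup = {"photo.jpg": b"ok", "notes.txt": b"v1"}
    local = {"notes.txt": b"v2"}  # photo.jpg deleted by accident, notes.txt edited
    print(one_way_copy(local, backup))  # {'photo.jpg': b'ok', 'notes.txt': b'v2'}
    print(mirror_sync(local, backup))   # {'notes.txt': b'v2'}
```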

Similarly, machine backups follow the same approach via a slightly different path. My and my girlfriend’s machines back up via Restic/Time Machine to my local NAS, which then applies the same first, second and third backup steps as the Seafile data. This data is again under a separate subaccount to keep it siloed on the Hetzner side.

Hetzner is configured to take monthly snapshots, this is for the entire storage box. Snapshots are accessible via /.zfs/snapshot. More on Hetzner snapshots here.

🔹 Cloud Migration

Recently, I published a post about backing up Synology DSM via rclone to a cloud backend.

Migrating from this to my new Hetzner backend was trivial.

On the Synology side, I just had to change the remote config in rclone.conf to my new configuration. This by itself would be enough, as the next run of my backup script would see an empty directory and start uploading everything, but that would peg my upload for a while, so I decided to move the data manually.

For less than £1, I rented a Hetzner ARM64 VPS and did a cloud-to-cloud migration using rclone, taking the opportunity to switch to new encryption keys on the new backend. Changing keys with rclone currently means downloading and re-uploading all the data: the storage backend has no compute of its own, so decryption and re-encryption have to happen on a machine running rclone.

And with that, I have replaced Google Drive and have confidence in my backup strategy. I am missing apps such as Docs, Sheets etc. Had I required this functionality, Nextcloud would have probably been more favorable.

📸 Photos

🔹 Immich

Immich. There are other options out there, such as PhotoPrism and Ente Photos, but in my opinion Immich is the Google Photos replacement. It describes itself as a high-performance self-hosted photo and video management solution. PhotoPrism looks good too, but I haven’t tried it, as Immich has the better feature set at the time of writing. It was great to see the Immich team join FUTO.

Ultimately, the beauty of this setup is its flexibility. If Immich doesn’t work out long-term, I can easily migrate my data to another application. It might take some time, but the key takeaway is that I’m not locked into a single provider.

🔹 Transition Period

Now, I must preface this by saying I am still using Google Photos. I’ll likely continue to as explained in the thoughts section below.

It’s important to note that the Immich team advises against using it as a sole data backup solution until they reach a more mature production phase.

As well as not being production ready, at the time of writing Immich has an absolutely massive missing feature for my use case…

🔹 RAW & JPEG

Currently I have duplicates of all photos that come from my phone in Immich, due to the fact that I save the RAW version as well as JPEG. I could just not, but I don’t want to not.

This limitation has been a major frustration for me and the stacking feature has been on the radar for a while, but until it’s officially implemented Immich isn’t usable to me full time, even though I do continue to ingest media into it.
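Conceptually, stacking just means grouping the RAW and JPEG versions of the same shot. Here is a naive Python sketch that pairs files by filename stem. The extension lists are my assumption, some phones name their RAW files differently from the matching JPEG, and this is not how Immich itself decides what to stack.

```python
from pathlib import PurePath

RAW_EXTENSIONS = {".dng", ".cr2", ".cr3", ".nef", ".arw", ".raf"}  # assumption: common RAW types
JPEG_EXTENSIONS = {".jpg", ".jpeg"}


def pair_raw_and_jpeg(paths: list[str]) -> dict[str, dict[str, str]]:
    """Return {stem: {"raw": path, "jpeg": path}} for every stem that has both versions."""
    groups: dict[str, dict[str, str]] = {}
    for path in paths:
        p = PurePath(path)
        ext = p.suffix.lower()
        if ext in RAW_EXTENSIONS:
            kind = "raw"
        elif ext in JPEG_EXTENSIONS:
            kind = "jpeg"
        else:
            continue  # videos, sidecars, etc. are ignored
        groups.setdefault(p.stem.lower(), {})[kind] = path
    return {stem: pair for stem, pair in groups.items() if len(pair) == 2}


if __name__ == "__main__":
    print(pair_raw_and_jpeg(["DCIM/IMG_0001.DNG", "DCIM/IMG_0001.JPG", "DCIM/IMG_0002.JPG"]))
```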

It’s very likely that by the time you’re reading this stacking has been fully implemented as it seems close as of now, in which case wooo! 🎉

🔹 Photo Flow

My flow for ingesting and backing up my and my girlfriend’s photos is as follows:

Again, this may seem quite convoluted, but it’s almost identical to the flow from before. Photos taken on mobile devices are backed up using the Immich app to my Immich server. This is done in the background like with Google Photos on both Android and iOS. The images are ingested and stored locally on an encrypted ZFS datastore, which then follows a replication scheme to other sites and the cloud.

Photos taken on my camera/drone/scanned from film (yes, I do occasionally use film) are ingested locally at my home and stored on my NAS. The backup for this data is identical to the previous flow, except that image data ingested at my home is not sent to the cloud directly; it is only uploaded after a two-way sync with the main site, to prevent any clashes.

🔹 Thoughts

Google Photos was certainly the big one, and there are several hurdles I simply cannot overcome. I only used Google Photos for my phone pictures, so implementing Immich, which now takes care of my entire library, was a great solve. I was always hesitant to upload my DSLR images to Google Photos, as I found them harder to organize there, and my DSLR library would quickly fill several TB that I would need to pay for.

On that front, Immich has been everything I had hoped for and continues to improve, it does feel like an upgrade for me.

Now, the not so good bits are all the social features of Google Photos. Being able to quickly and easily have shared albums between anyone with a Google account is irreplaceable at this point.

I will continue to use Google Photos as well as Immich for the foreseeable future but I am happy I have this setup, I’ve been using my DSLR much more now that the photos are much easier to peruse through via an app, so wins all round.

📍 Location Tracking

Location tracking was actually one of the easier things to migrate from. I had already disabled this on my account despite how useful I find it and so didn’t have any data to migrate.

In retrospect, I should have done a full export via Google Takeout before disabling it and removing everything, but for some reason I didn’t. I have been fortunate enough to travel to most continents, usually with a phone, so having this data would have been very nice, alas.

How I’m doing location tracking and logging is rather simple, and as usual, requires some impressive open-source software.

I use Home Assistant for more than a handful of automations at home. The Home Assistant app is essential for my girlfriend and me, and it is always sending our locations to HASS for various reasons. Since Home Assistant is already polling our phones, this data can also be sent somewhere to be logged long term.

🔹 Owntracks #

Owntracks is that somewhere.

Store and access data published by OwnTracks apps

Owntracks has two modules. The first is the recorder, which takes in location data over HTTP or MQTT, stores it in text files and serves it to other clients via an API.
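To give a feel for what "text files" means here: as I understand it, the recorder keeps a `.rec` file per user, device and month, with one record per line. The sketch below assumes each line is an ISO-8601 timestamp, a tab, a topic field (often `*`), a tab and the raw JSON payload; that layout is my assumption about the storage format, so check it against your own files. `parse_rec_line` and `iter_locations` are hypothetical helpers, not part of Owntracks.

```python
import json
from datetime import datetime
from typing import Iterator, Optional


def parse_rec_line(line: str) -> Optional[dict]:
    """Parse one line of a recorder .rec file, assuming the layout:
    ISO-8601 timestamp <TAB> topic-or-'*' <TAB> JSON payload."""
    parts = line.rstrip("\n").split("\t", 2)
    if len(parts) != 3:
        return None  # not a record line in the assumed format
    stamp, _topic, raw = parts
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if payload.get("_type") != "location":
        return None  # skip non-location records
    # fromisoformat() does not accept a trailing 'Z' on older Pythons.
    payload["recorded_at"] = datetime.fromisoformat(stamp.replace("Z", "+00:00"))
    return payload


def iter_locations(path: str) -> Iterator[dict]:
    """Yield every location record from one .rec file, line by line."""
    with open(path, encoding="utf-8") as fh:
        for line in fh:
            rec = parse_rec_line(line)
            if rec is not None:
                yield rec
```

Reading line by line keeps memory flat regardless of file size; the slowness discussed later comes from the browser rendering everything, not from the files themselves.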

I am using a Node-RED flow in Home Assistant to push location updates from the Home Assistant app on our phones to the recorder. Node-RED reformats the data to match the recorder’s expected format and sends it via HTTP POST to the recorder, internally across my WAN.
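The Node-RED flow itself isn’t shown here, but the transformation it performs can be sketched in Python. This assumes a Home Assistant `device_tracker` state with `latitude`, `longitude` and optional `gps_accuracy`/`battery_level` attributes plus an ISO-8601 `last_updated`, and a recorder that accepts HTTP POSTs on `/pub` with the user and device passed in `X-Limit-U`/`X-Limit-D` headers. The hostname `recorder.internal` and both function names are hypothetical, so check the recorder documentation for your version before relying on the endpoint or headers.

```python
import json
import urllib.request
from datetime import datetime

# Hypothetical recorder address; 8083 is the recorder's default HTTP port.
RECORDER_URL = "http://recorder.internal:8083/pub"


def ha_state_to_owntracks(state: dict, tid: str) -> dict:
    """Map a Home Assistant device_tracker state (assumed shape) to an
    Owntracks-style location message."""
    attrs = state["attributes"]
    # Home Assistant reports last_updated as an ISO-8601 string.
    tst = int(datetime.fromisoformat(state["last_updated"]).timestamp())
    payload = {
        "_type": "location",
        "lat": attrs["latitude"],
        "lon": attrs["longitude"],
        "tst": tst,
        "tid": tid,  # short tracker ID shown in the frontend
    }
    # Optional fields: only include them if Home Assistant provides them.
    if "gps_accuracy" in attrs:
        payload["acc"] = attrs["gps_accuracy"]
    if "battery_level" in attrs:
        payload["batt"] = attrs["battery_level"]
    return payload


def build_request(payload: dict, user: str, device: str) -> urllib.request.Request:
    """Build (but do not send) the HTTP POST to the recorder."""
    return urllib.request.Request(
        RECORDER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-Limit-U": user,    # recorder user name (assumed header)
            "X-Limit-D": device,  # recorder device name (assumed header)
        },
        method="POST",
    )
```

Calling `urllib.request.urlopen(build_request(...))` would actually send the point. Keeping the mapping separate from the transport makes it easy to test without a live recorder.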

Owntracks has its own iOS/Android app that sends data to an exposed recorder, but doing it my way means only one app is constantly polling my location, which is better for battery life. This does have a major drawback, though.

The other piece is the frontend:

🌍 Web interface for OwnTracks built with Vue.js

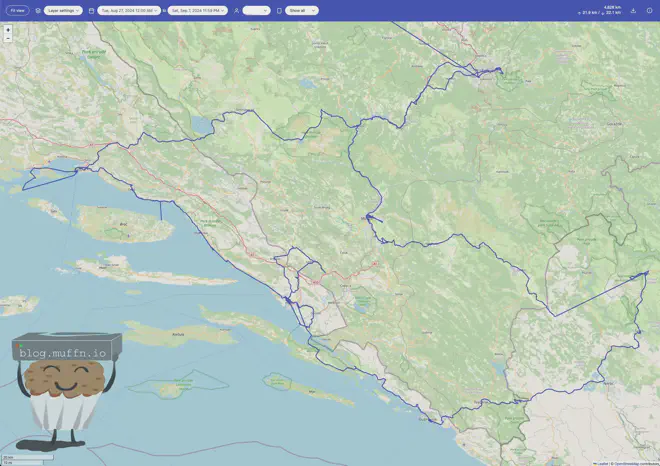

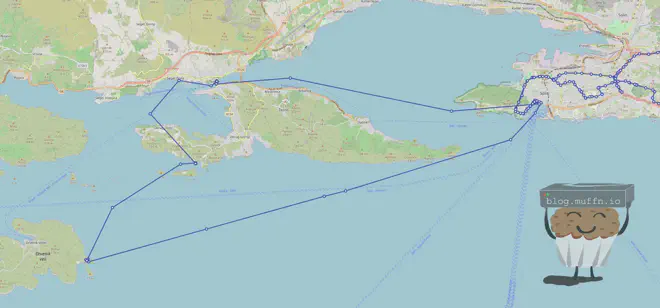

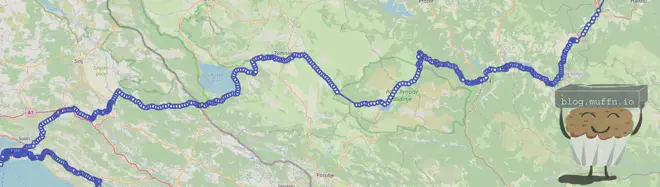

The frontend is what makes the data useful, letting me visualise it like so:

The detail captured in these logs is amazing when visualised. It’s very accurate and can be used to ascertain where you were at a given time.

There are several issues with this system though.

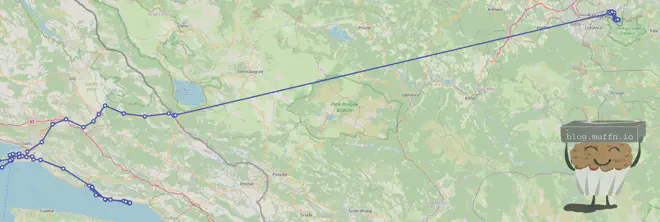

iOS seems to be throttling Home Assistant’s location updates, which makes the data only somewhat useful. This very much seems to be an iOS limitation rather than a Home Assistant one: iOS is much stricter about how often Home Assistant can poll, and location updates usually only arrive after a ‘major location change’ or something similar.

This is how my girlfriend’s iOS device reported this leg of the journey:

Compared to my Android device:

It usually isn’t as egregious as this example. I had my phone plugged in the whole time, and she doesn’t remember what her phone was doing, but the discrepancy is always there. I’m not sure anything can be done about this currently, but it works well enough as is.

And the main issue… this is not an offline solution.

That major drawback I mentioned is a big one: my implementation doesn’t allow any location logs to be saved when Home Assistant isn’t reachable. HASS doesn’t care where you’ve been; it cares where you are. This is where the official Owntracks app would be better suited, as it can cache location data while offline and send it once it’s able to do so.
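The caching behaviour that makes the official app better suited boils down to a local queue that is flushed once the server is reachable again. Here is a minimal sketch of that idea, with a pluggable, hypothetical `send` callable that returns `True` on success. It is an illustration of the concept, not how the Owntracks app is actually implemented.

```python
from collections import deque
from typing import Callable, Deque


class OfflineBuffer:
    """Queue location points while the server is unreachable and flush
    them in order once sending succeeds again."""

    def __init__(self, send: Callable[[dict], bool], max_points: int = 10_000):
        self._send = send
        # A bounded deque silently drops the oldest point when full.
        self._queue: Deque[dict] = deque(maxlen=max_points)

    def record(self, point: dict) -> None:
        """Store a new point, then try to push everything queued so far."""
        self._queue.append(point)
        self.flush()

    def flush(self) -> int:
        """Send queued points oldest-first; stop at the first failure."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # still offline, keep the rest for later
            self._queue.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._queue)
```

Bounding the queue with `maxlen` means a very long outage drops the oldest points rather than growing memory without limit; whether that trade-off is acceptable depends on how much history you care about.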

Having two location apps draining my battery, especially when I’m on holiday, is a bit 💀. I’m considering a few options instead:

- Use it as is and, when I care about tracking most (holidays), switch to the Owntracks app. This is probably the best option, but it requires manual intervention on the devices and stopping the Node-RED ingress.

- As above, but use a physical GPS recorder, something like a Garmin eTrex, and import the data manually. This is a good option, but it’s another thing to remember to bring everywhere, whereas there’s no chance I leave the house without my phone, even on small excursions. That being said, a dedicated GPS device is probably required for some of the more adventurous trips we want to do (Iceland, for example), but those are fringe cases.

- Whilst on holiday, Tigattack and I discussed making Owntracks the source of truth for location data. HASS would get real-time location data from Owntracks, and Owntracks would receive cached data in the event the signal was lost. This is more complex, but if he gets something up and working, it may be the way to go.

I’m unsure how to move forward with this, but for now, it’s perfectly fine as it is.

Overall, replacing Google location history has been a success and it’s great having all of this locally. Whilst Owntracks has been good, it isn’t without its flaws.

Owntracks storing data in a text-file backend and rendering everything in the browser means it is slow. Viewing too much data will kill the browser tab, and ‘too much data’ isn’t all that much. This hasn’t been a huge deal for me so far, but unless it gets reworked, it’s not viable for anything more than filtering and viewing short periods at a time.

🔹 Dawarich #

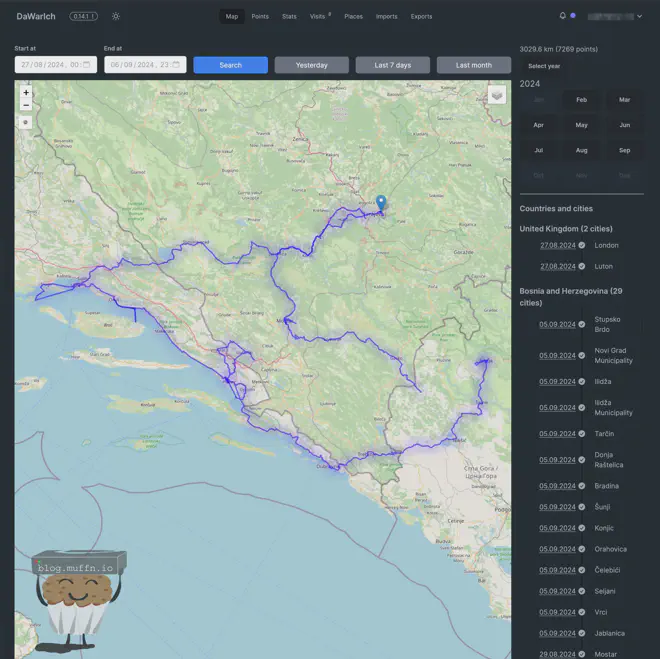

Dawarich is another app I’ve been testing, and it’s been very impressive. It is very actively developed, looks great and is much faster than Owntracks.

Self-hostable alternative to Google Location History (Google Maps Timeline)

I have been sending my data to both for a short while as of writing, and I imported my past Owntracks data into Dawarich. Running both in parallel costs me nothing and lets me keep testing both tools as they mature, but Dawarich is now my main go-to frontend.

Dawarich has a way to go before it is ‘fully featured,’ but it looks great and already has some awesome views.

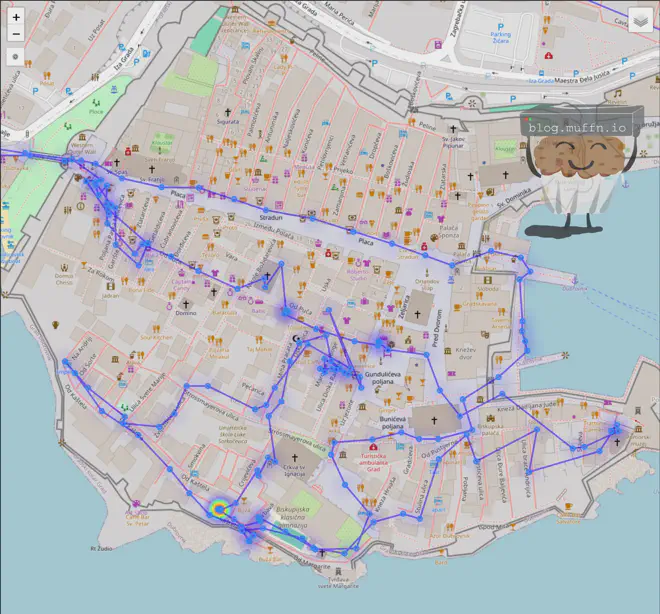

The heatmap view is cool too, showing exactly where I stopped or lingered.

The ‘biggest’ downside compared to Google Maps Timeline is that Google could tell you exactly where you had been, down to the specific restaurant, which is obviously not easy to do with FOSS tools. The location information is all there, though, so it’s easy enough to work out if you remember the time and date. A small price to pay as far as I’m concerned.
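Working out where you were from a remembered time is, at its core, a nearest-timestamp lookup. A minimal sketch, assuming points are dicts with an Owntracks-style `tst` field (Unix seconds) already sorted by time; `nearest_point` is a hypothetical helper, not a feature of Owntracks or Dawarich.

```python
from bisect import bisect_left
from typing import List, Optional


def nearest_point(points: List[dict], when: int) -> Optional[dict]:
    """Return the logged point whose 'tst' is closest to `when`.
    `points` must already be sorted by 'tst'."""
    if not points:
        return None
    times = [p["tst"] for p in points]
    i = bisect_left(times, when)  # first index with tst >= when
    if i == 0:
        return points[0]
    if i == len(points):
        return points[-1]
    before, after = points[i - 1], points[i]
    # On a tie, prefer the earlier point.
    return before if when - before["tst"] <= after["tst"] - when else after
```

The binary search keeps each lookup logarithmic, although building `times` is linear; for repeated queries you would build that list once and reuse it.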

Overall, I’m happy with the way these tools are being developed. Even if that weren’t the case, having the data itself is the most important thing. These are among the few applications I don’t expose to the internet, at least not for now, as I see no need for it.

👾 Other Battlefronts #

These were the big hitters I wanted to focus on in this post, but there are several smaller things worth mentioning.

🔹 Firefox #

I started using Firefox back when it was one of the first mainstream browsers with tab support, before Google Chrome even existed. I did eventually switch to Chrome for some years, but I have been solidly back on the Firefox train for five or so years now, and I can’t say I’ve ever thought about switching to anything else.

Firefox on mobile isn’t the fastest thing, but it allows me to have my extensions and keeps everything in sync.

🔹 FOSS Android Apps #

For mobile apps, I’ve been gradually replacing stock and Google Play Store apps with open-source alternatives from F-Droid (where possible). It’s amazing how many quality FOSS apps are out there, often without any ads or tracking. Apps that I personally use and recommend are:

- Android client application.

- A Material Design Weather Application

- Source code for 2FAS Android app

- Official FUTO Keyboard Issue Tracker and Source Mirror of https://gitlab.futo.org/keyboard/latinime

- K-9 Mail – Open Source Email App for Android

- VLC for Android, Android TV and ChromeOS

- 🦭 Video/Audio Downloader for Android, based on yt-dlp, designed with Material You

- Mirror only. Official repository is at https://git.zx2c4.com/wireguard-android

- Material Design file manager for Android

- 📱 Home Assistant Companion for Android

And a bunch of others if you want to explore here:

A list of Free and Open Source Software (FOSS) for Android – saving Freedom and Privacy.

I don’t have any advice for you if you are on iOS, but Sideloadly always looked good:

Sideloadly - iOS, Apple Silicon & TV Sideloading

🔹 Analytics and Tracking #

On this blog, I use Umami analytics, a privacy-friendly service for tracking view counts and trends. It collects no personal data and is cookieless. I used to use GoatCounter, which was also very good, but Umami is a lot more feature-rich and has a better UI.

🔹 GrapheneOS: The Road Not (Yet) Taken #

I’ve toyed with the idea of using GrapheneOS full-time, as I’ve tested it before and it’s impressive. However, I haven’t been able to make the switch because of Google Wallet. I’ve become reliant on contactless phone payments, and losing that functionality is a trade-off I’m not quite ready to make. Sometimes I just want to leave the house with only my phone, and Wallet allows this.

In my (albeit limited) testing, I was surprised by just how much worked seamlessly on GrapheneOS without much fiddling. Obvious things like banking apps were blockers, but this isn’t necessarily a deal-breaker. The more granular permission controls and stronger app sandboxing are very interesting features of GrapheneOS.

💭 Closing Words / Is It Worth It? #

I knew this wouldn’t be easy; Google’s services are popular for good reason – they’re intuitive, interconnected and, for the most part, they “just work.” Trading this convenience and interconnectivity for what I assume is better privacy sometimes felt rather pointless in the grand scheme of things.

🔹 The Challenge of True Privacy #

The question “is it worth it?” is quite hard to answer. On one hand, I’ve gained a deeper understanding of the tools I use daily and how my data moves between them; that data is now mine to control, as it always should have been.

On the other hand, privacy improvements are hard to quantify. Do I feel more secure? Yes, marginally. Does it matter? I don’t know. This is but a drop in the ocean of data that’s collected, analyzed, and exploited every day.

In a world where data breaches are commonplace, where governments engage in mass surveillance, and where big tech companies profile us in ever more intrusive ways, the notion of individual privacy can feel non-existent.

The fact of the matter is, even as I’ve taken these steps I know that my data is still being harvested and monetized in countless ways beyond my control. My browsing habits, my location history, my social connections - they’re all valuable data points in the vast machine learning models that shape our digital experiences and, increasingly, our real-world experiences.

🔹 Reclaiming Control, One Byte at a Time #

It’s a bleak picture, and it’s easy to feel powerless in the face of these systemic issues that seem to get worse as time passes. But I don’t think the answer is to resign ourselves to being just another data point. By taking control of your data where you can, by supporting privacy-respecting services and advocating for stronger data protections, we can slowly reclaim our data and ensure it isn’t used against us. It’s not a perfect solution, but it’s a start.

So, is it worth it? I believe it is - not just for the marginal gains in privacy, but for the shift in mindset it represents. By reclaiming control over our data, we’re reminding ourselves and each other that we are more than just data points to be harvested and sold.

We are individuals with the right to privacy, agency, and dignity in our digital lives.

Thank you for reading,

Muffn_ 🫶